Join Nixi AI as a

Bachelor/Master thesis student (m/f/d)

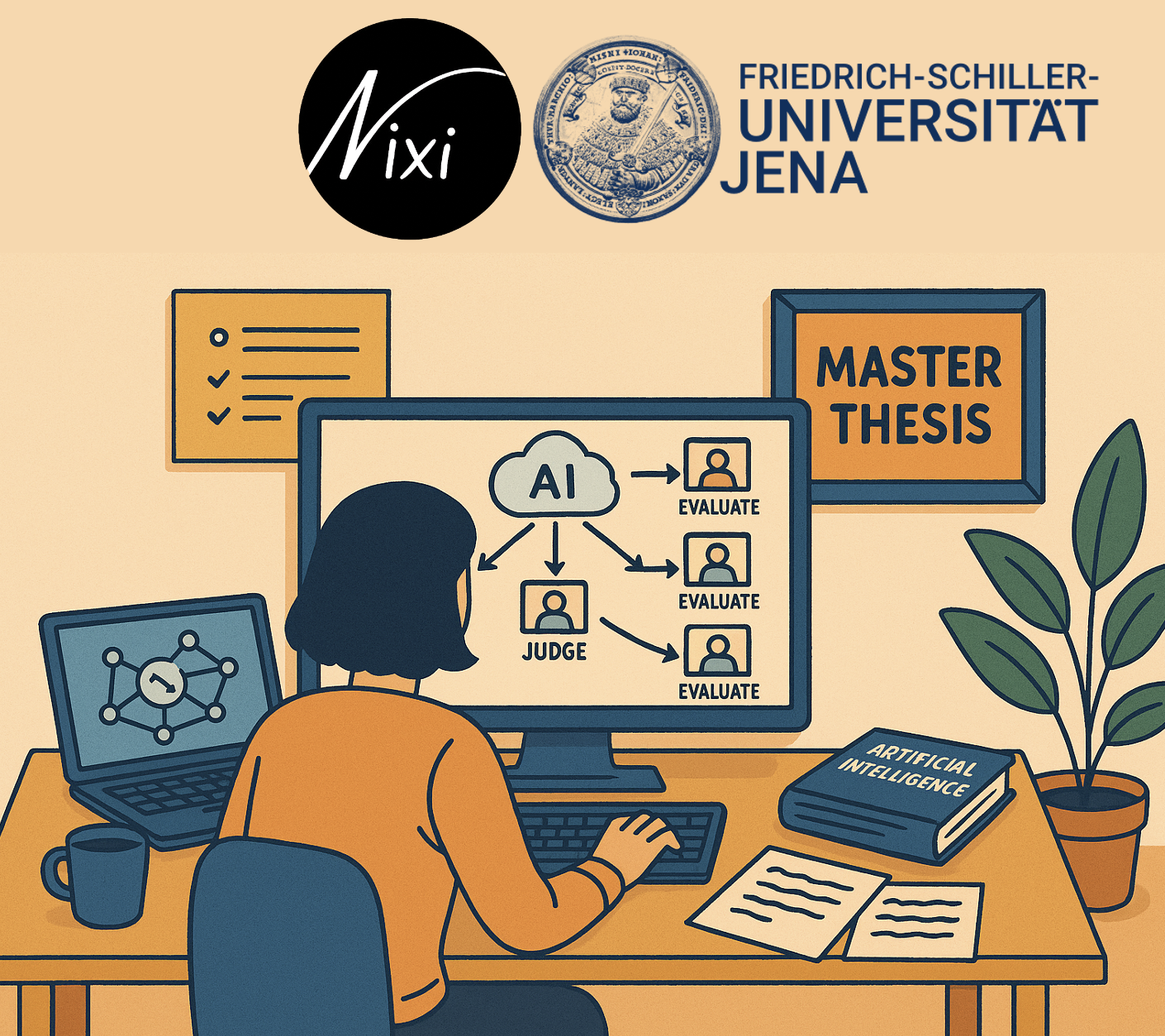

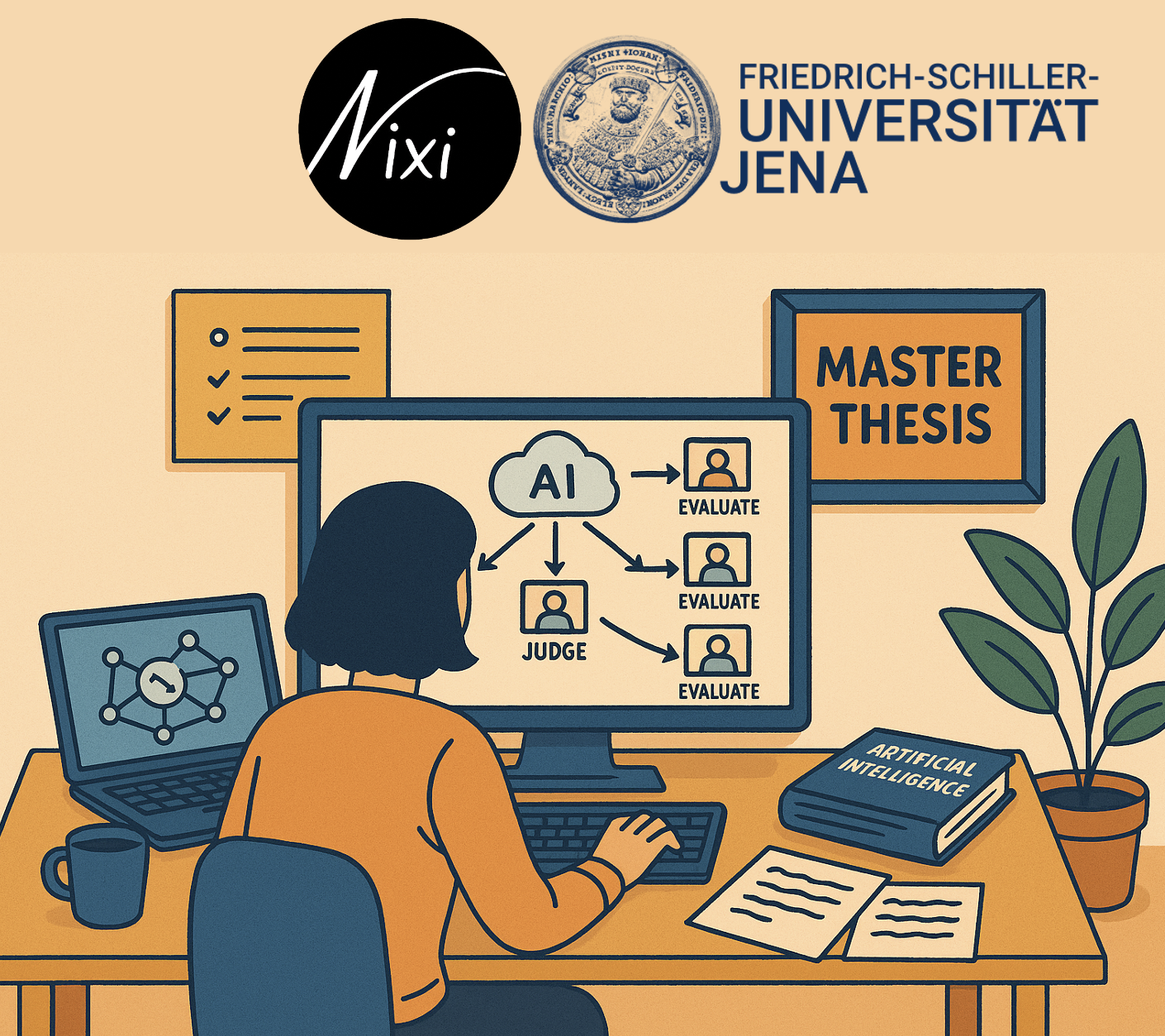

Evaluation of Multi-Agent Retrieval-Augmented Generation (RAG) Systems Without Ground Truth

Your Mission

You will:

- Build a multi-agent RAG system in Python, orchestrating calls to LLM agents.

- Design evaluation rubrics and structured outputs.

- Conduct prompt-tuning and calibration experiments, and create synthetic ground-truth (GT) data.

- Optionally, wrap components in a chatbot interface for live test interactions.
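One way to combine evaluation rubrics with structured outputs is to have each judge agent reply with a JSON object and to validate that object before using it. The sketch below is illustrative only: it assumes the three criteria named in the project description (relevance, factual accuracy, fluency), an integer scale from 1 to 5, and a hypothetical helper `parse_judge_output`. It uses only the Python standard library and does not represent any LangChain or LangGraph API.

```python
import json
from dataclasses import dataclass

# Hypothetical rubric: criteria taken from the project description,
# each scored on an assumed integer scale from 1 to 5.
CRITERIA = ("relevance", "factual_accuracy", "fluency")
SCORE_MIN, SCORE_MAX = 1, 5


@dataclass(frozen=True)
class JudgeVerdict:
    relevance: int
    factual_accuracy: int
    fluency: int
    rationale: str


def parse_judge_output(raw: str) -> JudgeVerdict:
    """Parse a judge LLM's JSON reply and check it against the rubric."""
    data = json.loads(raw)
    missing = [key for key in (*CRITERIA, "rationale") if key not in data]
    if missing:
        raise ValueError(f"judge output is missing fields: {missing}")
    for key in CRITERIA:
        score = data[key]
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(score, bool) or not isinstance(score, int) or not SCORE_MIN <= score <= SCORE_MAX:
            raise ValueError(f"{key} must be an integer in [{SCORE_MIN}, {SCORE_MAX}], got {score!r}")
    return JudgeVerdict(**{key: data[key] for key in CRITERIA}, rationale=str(data["rationale"]))


# Example: a well-formed (made-up) judge reply
reply = '{"relevance": 5, "factual_accuracy": 4, "fluency": 5, "rationale": "Answer matches the retrieved passage."}'
verdict = parse_judge_output(reply)
```

Rejecting malformed or out-of-range replies early keeps invalid judgments out of any downstream confidence computation.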

Why This Role Is Unique

- Work in a dynamic, international, and interdisciplinary environment in the beautiful city of Jena

- Your work directly shapes our product, positioning, and growth

- Work side-by-side with the founding team on all aspects, from pitch decks to pilot support

- Flexible working hours and a family-friendly working environment

Friedrich Schiller University is a traditional university with a strong research profile, rooted in the heart of Germany. As a university covering all disciplines, it offers a wide range of subjects. Its research focuses on three profile areas: Light, Life, and Liberty. It is closely networked with non-university research institutions, research companies, and renowned cultural institutions. With around 18,000 students and more than 8,600 employees, the university plays a major role in shaping Jena's character as a cosmopolitan and future-oriented city.

The Project

The position is affiliated with the FUSION group of Univ.-Prof. Dr. Birgitta König-Ries at Friedrich Schiller University Jena and is offered in collaboration with Nixi AI. The FUSION group is highly interdisciplinary and diverse and works on building better biodiversity platforms and tools. Nixi AI is a health-tech startup building an all-in-one platform for AI-powered medical documentation, billing, and decision support.

The project focuses on building and validating a multi-stage RAG (Retrieval-Augmented Generation) pipeline without relying on any pre-existing ground-truth data. It will explore two complementary evaluation methods:

- generating synthetic question-answer pairs with LLMs to serve as pseudo-ground truth, and

- an “LLM-as-a-judge” approach, in which one or more LLMs assess the relevance, factual accuracy, and fluency of the pipeline's outputs based on carefully designed prompts.

A primary objective is to produce a confidence score for each generated answer, so that end users can gauge how far to trust each of the system's responses. The evaluation framework will be implemented in Python, integrating judge agents and synthetic benchmarks. It will be validated through human spot-checks and statistical analyses to ensure that the confidence scores are well calibrated and that the judgments are reliable.
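The idea of a per-answer confidence score checked against human spot-checks can be illustrated with a minimal sketch. It assumes that several judge agents each give an answer a score from 1 to 5, that confidence is simply the mean of the normalized scores (a hypothetical aggregation, not a method prescribed by the project), and that human spot-checks yield a correct/incorrect label per answer. Calibration is measured here with expected calibration error (ECE), one common statistical check among several; the function names are illustrative.

```python
from statistics import mean


def confidence_from_judges(scores, scale_min=1, scale_max=5):
    """Hypothetical aggregation: map each judge score to [0, 1] and average."""
    if not scores:
        raise ValueError("need at least one judge score")
    span = scale_max - scale_min
    return mean((s - scale_min) / span for s in scores)


def expected_calibration_error(confidences, correct, n_bins=5):
    """ECE: bin answers by confidence, then take the size-weighted mean of
    |observed accuracy - mean confidence| over the non-empty bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and labels must have the same length")
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for c, y in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 falls into the last bin
        bins[idx].append((c, y))
    ece = 0.0
    for b in bins:
        if b:
            accuracy = sum(y for _, y in b) / len(b)
            avg_conf = sum(c for c, _ in b) / len(b)
            ece += len(b) / n * abs(accuracy - avg_conf)
    return ece


# Example: three judges score one answer
confidence = confidence_from_judges([5, 4, 4])  # ≈ 0.833

# Example: made-up confidences compared with human spot-check labels
ece = expected_calibration_error([0.9, 0.9, 0.1, 0.1], [True, True, False, False])
```

A low ECE means that answers assigned, for example, confidence 0.8 are judged correct by the human reviewers roughly 80% of the time; a high value suggests that the judge prompts or the aggregation step need recalibration.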

Who Thrives Here

- Student of computer science, mathematics, or a comparable field

- Experience with the Python programming language

- Prior experience with LLMs, LangChain, LangGraph, and Hugging Face is a plus

- Capable of working independently

- Willingness to learn new skills and technologies

- Fluency in English and good communication skills